If you consume online content (and if you are alive in 2022, you probably do), chances are that you’ve watched quite a few live streams. Be it online classes, sporting events, fitness lessons, or celebrity interactions, live streaming has quickly become the go-to source of learning and entertainment.

Live stream viewers made up over a third of all internet users in March and April 2020, while only 1 in 10 people in the US and UK streamed live content of their own. Two years on, almost 82% of internet use is expected to be devoted to streaming video by 2022.

A vast majority of live streaming applications are built on a protocol called HTTP Live Streaming, or HLS. In fact, if you’ve ever watched an Instagram live stream or tuned into the Super Bowl on the NBC Sports App, chances are, you’ve been touched by the magical hands of HLS.

So if you are looking to build that kind of sophisticated live streaming experience inside your app, this article should give you a comprehensive understanding of the HLS protocol and everything in it.

Read on to learn the basics of HLS, what it is, how it works, and why it matters for live streamers, broadcasters, and app developers.

## What is HLS?

HLS stands for HTTP Live Streaming. It is a media streaming protocol designed to deliver audio-visual content to viewers over the internet. It facilitates content transportation from media servers to viewer screens: mobile, desktop, tablets, smart TVs, etc.

Created by Apple, HLS is widely used for distributing live and on-demand media files. For anyone who wants to adaptively stream to Apple devices, HLS is the only option. In fact, if you have an App Store app that offers video content longer than 10 minutes or heavier than 5 MB, HLS is mandatory. You also have to provide at least one stream that is 64 Kbps or lower.

Bear in mind, however, that even though HLS was developed by Apple, it is now the most preferred protocol for distributing video content across platforms, devices, and browsers. HLS enjoys broad support among most streaming and distribution platforms.

HLS allows you to distribute content and ensure excellent viewing experiences across devices, playback platforms, and network conditions. It is the ideal protocol for streaming video to large audiences scattered across geographies.

## A little history

HLS was originally created by Apple to stream to iOS and Apple TV devices, and to Macs running OS X Snow Leopard or later.

In the early days of video streaming, Real-Time Messaging Protocol (RTMP) was the de facto standard for streaming video over the internet. However, with the emergence of HTML5 players that supported only HTTP-based protocols, RTMP became inadequate for streaming.

With the rising dominance of mobile and IoT in the last decade, RTMP took a hit due to its inability to support native playback on these platforms. Flash Player had to give ground to HTML5, which resulted in a decline in Flash support across clients. This further contributed to RTMP’s unsuitability for modern video streaming.


In 2009, Apple developed HLS, designed to focus on the quality and reliability of video delivery. It was an ideal solution for streaming video to devices with HTML5 players. Its rising popularity also had much to do with its unique features, listed below:

- Adaptive Bitrate Streaming
- Embedded closed captions
- Fast forward and rewind
- Timed metadata
- Dynamic ad insertion
- Digital Rights Management (DRM)

## How does HLS work?

HLS has become the default way to play video on demand. Here’s how it works: HLS takes one big video and breaks it into smaller segments (video files), each typically a few seconds long. The usual segment length follows Apple’s recommendations, which have changed over time.

Here’s an example:

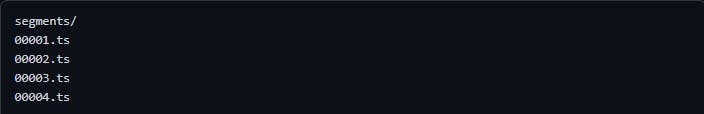

Let’s say there is a one-hour-long video, which has been broken into 10-second segments. You end up with 360 segments. Each segment is a video file ending with .ts. For the most part, they are numbered sequentially, so you end up with a directory of sequentially numbered segment files.
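As a rough sketch of that layout, the Python snippet below generates the file names for the one-hour example. The `segment_NNN.ts` naming pattern and the `segment_names` helper are assumptions made for illustration; real packagers choose their own naming schemes.

```python
import math


def segment_names(video_seconds: int, segment_seconds: int, prefix: str = "segment") -> list[str]:
    """Return sequential .ts file names covering the whole video."""
    count = math.ceil(video_seconds / segment_seconds)
    # Zero-pad the index so the files sort in playback order.
    width = max(3, len(str(count - 1)))
    return [f"{prefix}_{i:0{width}d}.ts" for i in range(count)]


# One hour of video cut into 10-second segments.
names = segment_names(video_seconds=3600, segment_seconds=10)
print(len(names))                      # 360
print(names[0], names[1], names[-1])   # segment_000.ts segment_001.ts segment_359.ts
```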

The video player downloads and plays each segment as the user is streaming the video. The size of the segments can be configured to be as low as a couple of seconds. This makes it possible to minimize latency for live streaming use cases. The video player also keeps a buffer of downloaded segments in case it loses network connection at some point.

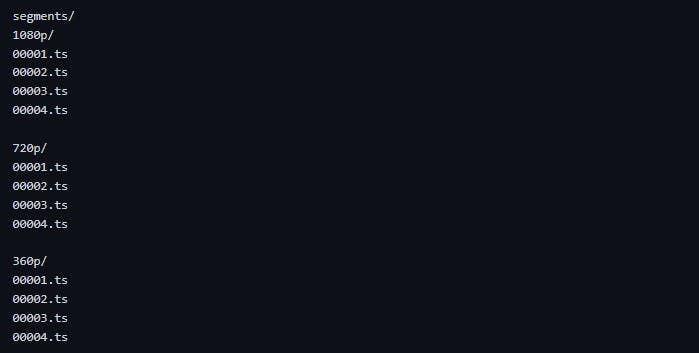

HLS also allows you to create each video segment at different resolutions/bitrates. Taking the example above, HLS lets you create the same segments at several quality levels, such as 1080p, 720p, and 360p. The directory then holds one set of segments per quality level.

Once these segments are created at different bitrates, the video player can actually choose which segments to download and play, depending on the network strength and bandwidth available. That means if you are watching the stream at lower bandwidth, the player picks and plays video segments at 360p. If you have a stronger internet connection, you get the segments at 1080p.

In the real world, that means the video doesn’t get stuck; it just plays at different quality levels. This is called Adaptive Bitrate Streaming (ABR).
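The selection logic can be sketched in a few lines of Python. This is a simplified illustration, not how any particular player implements ABR: the bitrate ladder, the `Rendition` class, the `pick_rendition` function, and the 0.8 safety margin are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bandwidth_bps: int  # bitrate the rendition needs, in bits per second


# Hypothetical ladder; the bitrates are illustrative, not recommendations.
LADDER = [
    Rendition("360p", 800_000),
    Rendition("720p", 2_800_000),
    Rendition("1080p", 5_000_000),
]


def pick_rendition(measured_bps: float, ladder=LADDER, safety: float = 0.8) -> Rendition:
    """Pick the highest rendition that fits within a fraction of the measured throughput."""
    budget = measured_bps * safety
    ordered = sorted(ladder, key=lambda r: r.bandwidth_bps)
    best = ordered[0]  # always fall back to the lowest rendition
    for rendition in ordered:
        if rendition.bandwidth_bps <= budget:
            best = rendition
    return best


for mbps in (0.5, 4.0, 10.0):
    print(mbps, "Mbps ->", pick_rendition(mbps * 1_000_000).name)
```

A real player re-runs a decision like this for each segment it fetches, which is why quality can change mid-stream without playback stopping.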

## What Adaptive Bitrate Streaming means in the real world

Imagine you’re streaming the Super Bowl live on your phone (because you just had to drive out of town that day). Just as the Rams are racing towards their winning touchdown, you hit a spot of questionable network in the Nevada desert.

You’d think that means the livestream would basically stop working because your network strength has dropped. But, thanks to ABR, that wouldn’t be the case.

Instead of ceasing to work, the stream would simply adjust itself to the current network. Let’s say you were watching the stream at 720p. Now, you’d get the same stream at 240p. That means, even though there is a drop in video quality, you would still be able to see Cooper Kupp score his MVP-winning touchdown. HLS would enable this automatically by switching to a lower-quality rendition that matches your network.

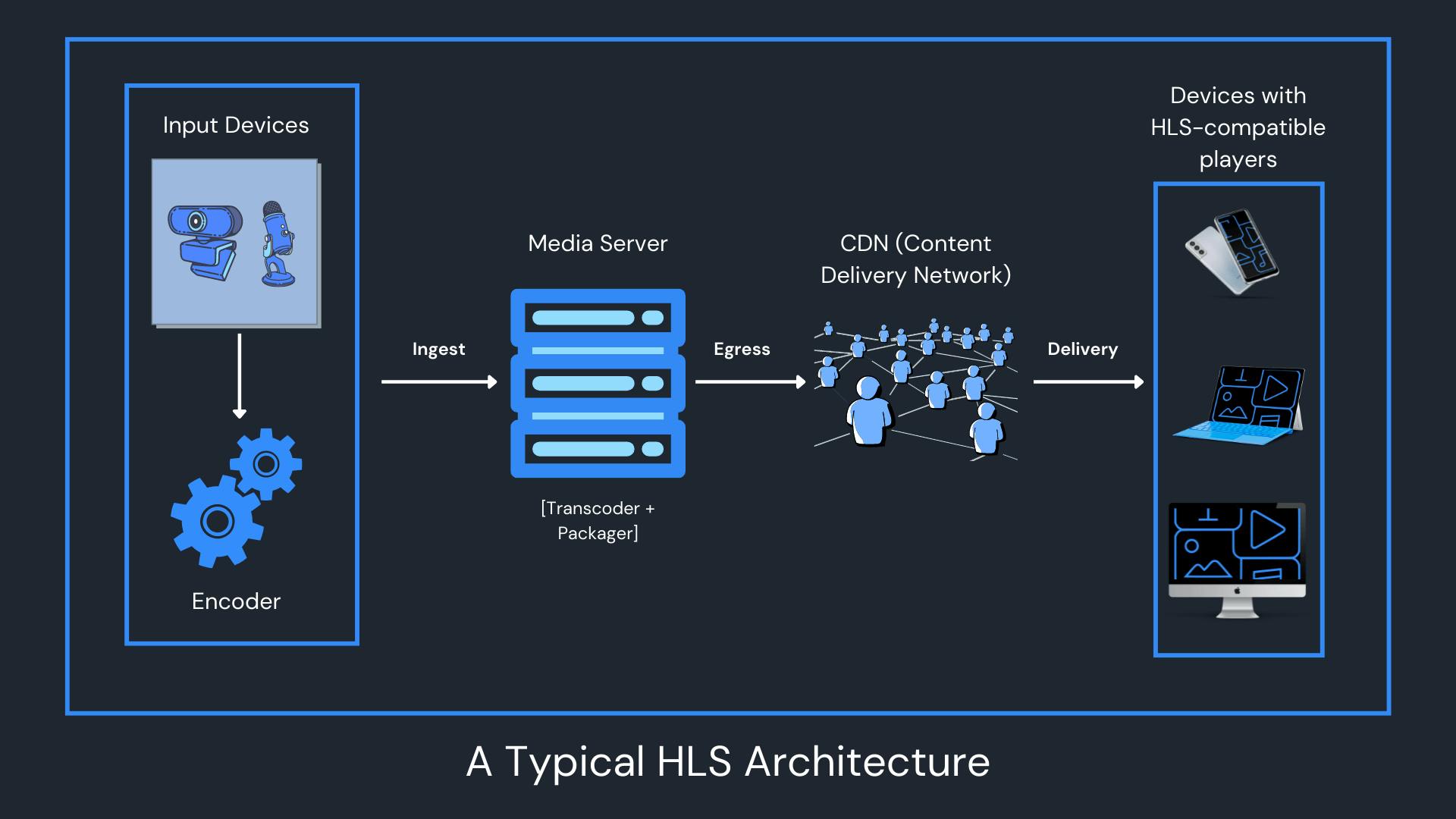

## HLS Streaming Components

Three major components facilitate an HLS stream:

- The Media Server
- The Content Delivery Network
- The Client-side Video Player

### HLS Server (Media Server)

Once audio/video has been captured by input devices like cameras and microphones, it is encoded into a format that video players can decode and play: H.264 for video and AAC or MP3 for audio.

The video is then sent to the HLS server (sometimes called the HLS streaming server) for processing. The server performs all the functions we’ve mentioned — segmenting video files, adapting segments for different bitrates, and packaging files into a certain sequence. It also creates index files that carry data about the segments and their playback sequence. This is information the video player will need to play the video content.
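To make the index files concrete, here is a minimal Python sketch that writes the two kinds of playlist an HLS server typically produces: a media playlist listing the segments of one rendition, and a master playlist pointing at each rendition. The tags come from the HLS specification (RFC 8216); the function names, file names, and bitrates are assumptions for illustration.

```python
def media_playlist(segment_uris, segment_seconds: int) -> str:
    """Build a VOD media playlist listing segments in playback order."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{segment_seconds}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
    ]
    for uri in segment_uris:
        lines.append(f"#EXTINF:{segment_seconds:.3f},")  # duration of the next segment
        lines.append(uri)
    lines.append("#EXT-X-ENDLIST")  # no more segments will be added
    return "\n".join(lines) + "\n"


def master_playlist(variants) -> str:
    """variants: iterable of (bandwidth_bps, 'WIDTHxHEIGHT', playlist_uri)."""
    lines = ["#EXTM3U"]
    for bandwidth, resolution, uri in variants:
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},RESOLUTION={resolution}")
        lines.append(uri)
    return "\n".join(lines) + "\n"


# Hypothetical file names and bitrates.
print(media_playlist(["segment_000.ts", "segment_001.ts"], segment_seconds=10))
print(master_playlist([
    (800_000, "640x360", "360p.m3u8"),
    (2_800_000, "1280x720", "720p.m3u8"),
    (5_000_000, "1920x1080", "1080p.m3u8"),
]))
```

The player fetches the master playlist first, picks a rendition, and then follows that rendition’s media playlist to find the segments.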

### Content Delivery Network (CDN)

With the volume of video content to store, queue, and process, a single video server responding to requests from multiple devices would likely experience immense stress, slow down, and possibly crash. This is prevented by using Content Delivery Networks (CDNs).

A CDN is a network of interconnected servers placed across the world. The main criterion for distributing cached content (video segments in this case) is the closeness of the server to the end-user. Here’s how it works:

A viewer presses the play button, and their device requests the content. The request is routed to the closest server in the CDN. If this is the first time that particular video segment has been requested, the CDN server will forward the request to the origin server where the original segments are stored. The origin server responds by sending the requested file to the CDN server.

Now, the CDN server will not only send the requested file to the viewer but also cache a copy of it locally. When other viewers (or even the same one) request the same video, the request no longer goes to the origin server. The cached files are sent from the local CDN server.

CDN servers are spread across the globe. This means requests for content do not have to travel countries and continents to the origin server every time someone wants to watch a show.
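The request flow described above can be modeled as a small in-memory simulation. This is a toy sketch, not a real CDN: the `Origin` and `EdgeServer` classes are hypothetical, and it ignores cache expiry, eviction, and routing.

```python
class Origin:
    """Stands in for the origin server that stores the original segments."""

    def __init__(self, files: dict):
        self.files = dict(files)
        self.requests = 0  # how many times the origin was actually hit

    def fetch(self, path: str) -> bytes:
        self.requests += 1
        return self.files[path]


class EdgeServer:
    """Stands in for the CDN server closest to the viewer."""

    def __init__(self, origin: Origin):
        self.origin = origin
        self.cache = {}

    def get(self, path: str):
        if path in self.cache:
            return self.cache[path], "HIT"   # served from the local copy
        data = self.origin.fetch(path)       # first request goes to the origin
        self.cache[path] = data              # keep a copy for later viewers
        return data, "MISS"


origin = Origin({"720p/segment_000.ts": b"video-bytes"})
edge = EdgeServer(origin)

print(edge.get("720p/segment_000.ts")[1])  # MISS: fetched from the origin
print(edge.get("720p/segment_000.ts")[1])  # HIT: served from the edge cache
print(origin.requests)                     # 1
```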

### HTML5 Player

To view the video files, end-users need an HTML5 player on a compatible device. Ever since Adobe Flash passed into the tech graveyard, HLS has become the default delivery protocol. Getting a compatible player won’t be a challenge, since most browsers and devices can play HLS either natively or through a JavaScript player library.

However, HLS does provide advanced features which some players may not support. For example, certain video players may not support captions, DRM, ad injection, thumbnail previews, and the like. If these features are important to you, make sure whichever player you choose supports them.

## Resolving Latency Issues in HLS

Apple recommended 10-second segments until 2016. That particular spec called for loading three video segments before the player could start the video. With 10-second segments, content distributors therefore suffered a 30-second latency before playback could begin. Apple did eventually cut the recommended duration down to 6 seconds, but that still left streamers and broadcasters with noticeable latency. Ever since then, reducing segment size has been a popular way to drive down latency. By ‘tuning’ HLS with shorter chunks, you can accelerate download times, which speeds up the whole pipeline.
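The arithmetic behind those numbers is simple enough to check in a few lines of Python. This sketch assumes the player buffers three full segments before starting and ignores encoding, network, and CDN delays; the `startup_buffer_seconds` helper is made up for the example.

```python
def startup_buffer_seconds(segment_seconds: int, segments_before_play: int = 3) -> int:
    """Seconds of media a player must load before playback can begin."""
    return segment_seconds * segments_before_play


for seconds in (10, 6, 2):
    print(f"{seconds}s segments -> {startup_buffer_seconds(seconds)}s buffered before playback")
```

With three segments buffered, 10-second segments give 30 seconds, 6-second segments give 18 seconds, and 2-second segments give 6 seconds.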

In 2019, Apple released its own extension of HLS called Low Latency HLS (LL-HLS), often referred to as Apple Low Latency HLS (ALHLS). The new standard not only came with significantly lower latency but was also compatible with Apple devices. Naturally, this made LL-HLS a massive success, and it has been widely adopted across platforms and devices.

LL-HLS comes with two major changes to its spec which are largely responsible for reducing latency. One is to divide the segments into parts and deliver them as soon as they’re available. The other is to ensure that the player has data about the upcoming segments even before they are loaded.
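As a hedged illustration of those two changes, the sketch below builds the playlist lines a server might publish for a segment that is still being produced: one `EXT-X-PART` line per part that is already available, followed by an `EXT-X-PRELOAD-HINT` line announcing the next part before it exists. The tag names come from Apple’s LL-HLS specification; the `live_edge_lines` function, the file naming, and the one-second part duration are assumptions, and a complete playlist needs additional tags not shown here.

```python
def live_edge_lines(segment_index: int, parts_ready: int, part_seconds: float) -> list[str]:
    """Playlist lines for a segment whose parts are still being produced."""
    lines = []
    for part in range(parts_ready):
        # Each finished part is advertised as soon as it is available.
        uri = f"segment_{segment_index}.part{part}.mp4"
        lines.append(f'#EXT-X-PART:DURATION={part_seconds:.3f},URI="{uri}"')
    # Tell the player which part comes next so it can request it early.
    next_uri = f"segment_{segment_index}.part{parts_ready}.mp4"
    lines.append(f'#EXT-X-PRELOAD-HINT:TYPE=PART,URI="{next_uri}"')
    return lines


for line in live_edge_lines(segment_index=42, parts_ready=2, part_seconds=1.0):
    print(line)
```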

A detailed breakdown of LL-HLS is beyond the scope of this article. However, you can find a structured deep-dive into the protocol in this Introduction to Low Latency Streaming with HLS.

## When to use HLS Streaming

- When delivering high-resolution videos larger than 3 MB: Without HLS, viewing such content usually leads to sub-par user experiences, especially when the user is on an average internet and/or mobile connection.
- When broadcasting live video from one to millions to reach the broadest audience possible: Not only is HLS supported by most browsers and operating systems, but it also offers ABR, which allows content to be viewed at different network speeds (Cellular, 3G, 4G, LTE, WIFI Low, WIFI High).
- When reducing overall costs: HLS reduces CDN costs by delivering the video at the optimal bitrate to viewers.
- When using advanced features in your stream: With HLS, you can leverage ad insertion, DRM, closed captions, adaptive bitrate, and much more.
- When you expect your audience to use Apple devices: HLS enjoys support across devices, but Apple devices favor HLS over MPEG-DASH and other alternatives. Additionally, apps on the App Store with videos longer than 10 minutes are required to use HLS.
- When you are concerned with security: HLS supports encryption and DRM for video on demand, which helps reduce piracy.

## Major Advantages of HLS

- Transcoding for Adaptive Bitrate Streaming

We’ve already explained what ABR is in a previous section. Transcoding here refers to altering video content from one bitrate to another. In the above example, video segments are converted to 1080p, 720p, and 360p from a single, high-resolution stream.

In the HLS workflow, the video travels from the origin server to a streaming server with a transcoder. The transcoder creates multiple versions of each video segment at different bitrates. The video player picks the version that works best with the end-user’s internet connection and plays the video accordingly.
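One common way to produce such renditions is FFmpeg’s HLS muxer. The Python sketch below only builds the command line for a single rendition rather than running it, so it works without FFmpeg installed. The `hls_transcode_command` helper, the file names, and the bitrate are assumptions for illustration, and production encoding ladders usually need more tuning than this.

```python
import shlex


def hls_transcode_command(source: str, height: int, video_kbps: int,
                          segment_seconds: int = 10) -> list[str]:
    """Build an ffmpeg command that writes one HLS rendition (playlist plus .ts segments)."""
    name = f"{height}p"
    return [
        "ffmpeg", "-i", source,
        "-vf", f"scale=-2:{height}",          # resize, keeping the aspect ratio
        "-c:v", "libx264", "-b:v", f"{video_kbps}k",
        "-c:a", "aac",
        "-f", "hls",
        "-hls_time", str(segment_seconds),    # target segment duration in seconds
        "-hls_playlist_type", "vod",
        "-hls_segment_filename", f"{name}_%03d.ts",
        f"{name}.m3u8",
    ]


# Hypothetical source file and bitrate.
print(shlex.join(hls_transcode_command("input.mp4", height=720, video_kbps=2800)))
```

Running the equivalent command once per rendition (for example 1080p, 720p, and 360p) yields one playlist and one set of segments per quality level.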

- Delivery and Scaling

With HLS, it is much easier to broadcast live video from one to millions. This is because most browsers and OSes support HLS.

Since HLS can use web servers in a CDN to push media, the digital load is distributed among HTTP server networks. This makes it easy to cache audio-video chunks, which can be delivered to viewers across all locations. As long as they are close to a web server, they can receive video content.

## Does 100ms support HLS for live streaming?

100ms supports live streaming output via HLS. However, we use WebRTC as input for the stream, unlike services that offer the infrastructure for live streaming alone, which generally use RTMP.

The 100ms live streaming stack combines streaming with conferencing, enabling our customers to build more engaging live streams that support multiple broadcasters, interactivity between broadcaster and viewer, and easy streaming from mobile devices. Since the entire audio/video SDK is packaged into one product, broadcasters can freely toggle between HLS streams and WebRTC, thus allowing two-way interaction while live streaming.