Internet users today expect flawless online experiences, be it browsing Instagram, streaming BTS, or debating anime fandoms. This expectation extends to online video communication.

If they are to meet the expectations of contemporary users, video conferences must offer sub-second latency and high-quality audio/video transmission. Usually, developers choose WebRTC to build video experiences of this caliber.

You might’ve read or heard about how WebRTC is a client-oriented protocol that usually doesn’t require any server to function. However, that’s not the whole story.

## The Server in WebRTC

It is true that some WebRTC calls can work without a media or relay server. But even in those cases, a signaling server is required to establish the connection.

Many calls would fail over a direct connection, and even when they do connect, issues arise as more peers join in, as we will discuss later in the article. To solve these issues, it is recommended that you use additional servers that provide workarounds and optimize call performance in WebRTC ecosystems.

This article discusses a few server-side elements you must consider when building a WebRTC solution. We will talk about the servers, multi-peer WebRTC architectures, and how it all works, so that you can make the right architecture choices for your WebRTC application.

The following servers are explored in this piece. Some are mandatory, while the need for others depends on the architecture you choose:

- Signaling Server (Mandatory)
- STUN/TURN Servers (Mesh Architecture)
- WebRTC Media Servers
  - MCU Server (Mixing Architecture)
  - SFU Server (Routing Architecture)
  - SFU Relay (Routing Architecture)

## Signaling Server

Signaling refers to the exchange of session-control information between peers, such as SDP offers and answers and ICE candidates. It is required to set up, control, and terminate a WebRTC call. WebRTC does not mandate a particular signaling protocol or transport, so developers can manage signaling as they see fit. To implement this out-of-band signaling, a dedicated signaling server is often used.
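To make the idea concrete, here is a minimal sketch of a signaling relay, assuming a hypothetical `SignalingServer` class that keeps everything in memory. A real deployment would typically carry these messages over a network transport such as WebSockets; the message types (`offer`, `answer`, `candidate`) mirror what WebRTC peers exchange, but the field names and the room model are assumptions for illustration.

```python
from collections import defaultdict


class SignalingServer:
    """Hypothetical in-memory signaling relay, for illustration only.

    Each peer's "connection" is just a list (inbox) that collects the
    messages delivered to it.
    """

    def __init__(self):
        self.rooms = defaultdict(dict)  # room name -> {peer_id: inbox}

    def join(self, room, peer_id):
        inbox = []
        self.rooms[room][peer_id] = inbox
        # Tell existing peers that someone joined so one of them can send an offer.
        self.broadcast(room, peer_id, {"type": "peer-joined", "peer": peer_id})
        return inbox

    def leave(self, room, peer_id):
        self.rooms[room].pop(peer_id, None)
        self.broadcast(room, peer_id, {"type": "peer-left", "peer": peer_id})

    def broadcast(self, room, sender, message):
        for pid, inbox in self.rooms[room].items():
            if pid != sender:
                inbox.append(message)

    def send(self, room, sender, target, message):
        # Relay offers, answers, and ICE candidates to a single peer.
        # The server never inspects or modifies the SDP payload.
        if message["type"] not in {"offer", "answer", "candidate"}:
            raise ValueError(f"unsupported message type: {message['type']}")
        self.rooms[room][target].append({**message, "from": sender})


server = SignalingServer()
alice = server.join("room-1", "alice")
bob = server.join("room-1", "bob")
# "v=0 ..." stands in for a real SDP blob.
server.send("room-1", "alice", "bob", {"type": "offer", "sdp": "v=0 ..."})
server.send("room-1", "bob", "alice", {"type": "answer", "sdp": "v=0 ..."})
```

Keeping the server ignorant of SDP contents is deliberate: signaling only has to move messages between the right peers.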

The signaling server is mainly used to initiate a call. Once the peers have exchanged the information needed to connect, WebRTC handles the media connection itself. However, this does not mean that the signaling server is no longer needed once the call has started.

Even though most state changes, such as muting or unmuting audio, can be communicated to the other peer(s) through WebRTC data channels, the signaling server must remain available throughout the call. It handles unusual scenarios such as a network disconnection, where a peer needs signaling (for example, to renegotiate the session with an ICE restart) to rejoin the call.

Now, we will take a look at the multi-peer architecture in WebRTC, before moving on to the servers we might need once signaling is complete.

## WebRTC multi-peer architecture

In WebRTC, there are multiple architectures that define how the peers are connected in a call. Generally, the server-side requirements depend on the architecture that you choose. Picking the right architecture for your use case helps identify the servers you will need.

We will now take a look at the most popular WebRTC architectures:

Mesh architecture

Mixing architecture

Routing architecture

Below, we discuss these architectures along with the servers they need to function properly.

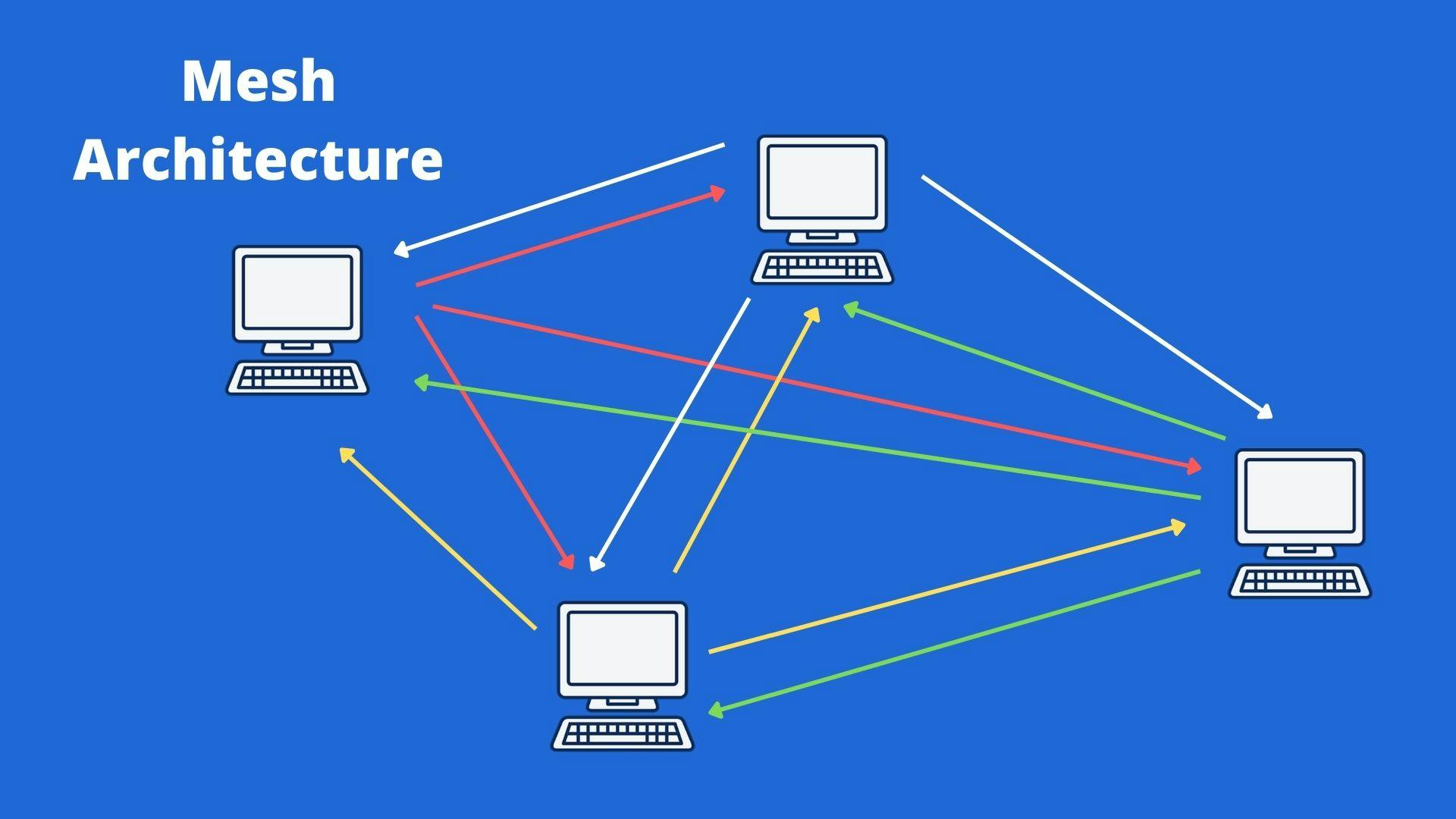

## Mesh Architecture (STUN/TURN Servers)

In this architecture, every peer is directly connected to every other peer in the call. For example, in a call with 4 peers, every peer has to send their video to 3 other peers and receive video from the same 3.
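A short sketch of the connection counts, using hypothetical helper names: a full mesh needs one peer connection per pair of peers, so the total grows as N(N-1)/2, while each individual peer maintains N-1 of them.

```python
from itertools import combinations


def mesh_connections(peers):
    """Return every peer-to-peer link in a full-mesh call (one per pair)."""
    return list(combinations(peers, 2))


def streams_per_peer(n):
    """In a mesh, each peer sends to and receives from every other peer."""
    return {"uplinks": n - 1, "downlinks": n - 1}


peers = ["A", "B", "C", "D"]
links = mesh_connections(peers)
print(len(links))           # 6 connections in total: 4 * 3 / 2
print(streams_per_peer(4))  # {'uplinks': 3, 'downlinks': 3}
```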

This is generally suitable for WebRTC calls with a limited number of peers.

NAT restrictions and firewalls:

A NAT (Network Address Translation) router maps the private IP addresses of devices on its local network to a public IP address. A peer behind a NAT router knows only its private IP address, which is not valid outside its local network, so it cannot share its actual public IP address during the signaling phase.

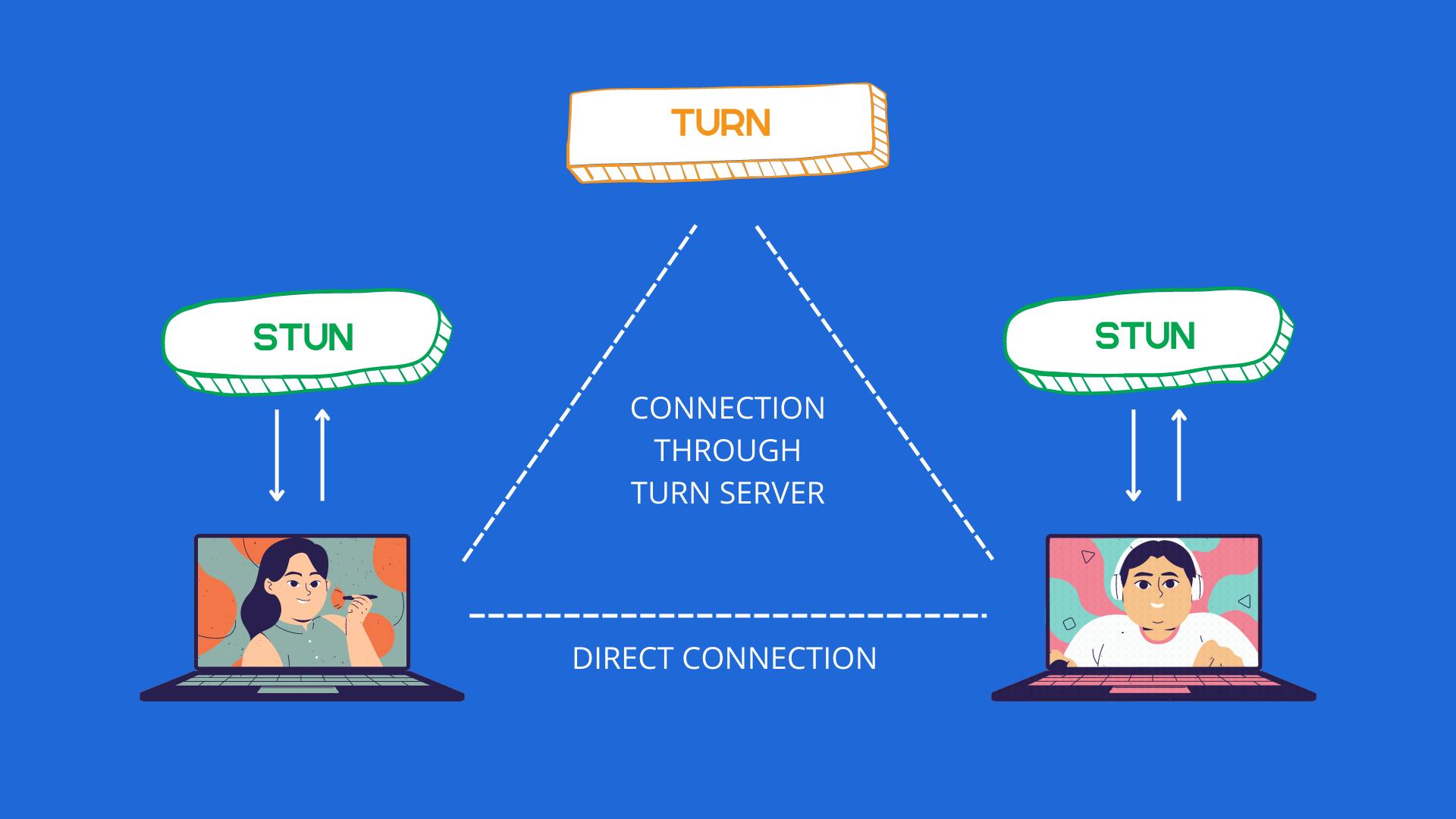

Additionally, when peers are behind a Symmetric NAT (a type of NAT) router, a direct connection usually fails. A Symmetric NAT assigns a different public port for each destination, so the address a peer learns through one server is not valid for connections to other peers. In some cases, a firewall on the peer’s device or network might also block direct connections to other peers over the internet for security reasons. When a direct connection is not possible in this architecture, NAT traversal servers such as STUN and TURN servers can be used.
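The toy model below illustrates why a Symmetric NAT defeats STUN. It is a simplification built around a hypothetical `Nat` class that looks only at how outbound mappings are allocated (RFC 4787 describes real NAT mapping and filtering behaviors in detail); the IP addresses are documentation-range placeholders.

```python
import itertools


class Nat:
    """Toy NAT mapping an internal (ip, port) to a public (ip, port).

    symmetric=False: one public port per internal socket, reused for every
    destination (endpoint-independent mapping).
    symmetric=True: a new public port for every destination, as in a Symmetric NAT.
    """

    def __init__(self, public_ip, symmetric):
        self.public_ip = public_ip
        self.symmetric = symmetric
        self.mappings = {}
        self._ports = itertools.count(40000)

    def outbound(self, src, dst):
        # The destination only matters for the mapping when the NAT is symmetric.
        key = (src, dst) if self.symmetric else src
        if key not in self.mappings:
            self.mappings[key] = (self.public_ip, next(self._ports))
        return self.mappings[key]


peer = ("192.168.1.10", 5000)
stun_server = ("198.51.100.1", 3478)
other_peer = ("203.0.113.7", 6000)

for symmetric in (False, True):
    nat = Nat("203.0.113.50", symmetric)
    seen_by_stun = nat.outbound(peer, stun_server)
    seen_by_peer = nat.outbound(peer, other_peer)
    print(symmetric, seen_by_stun == seen_by_peer)
# False True  -> the STUN-discovered address also works for the other peer
# True False  -> the other peer sees a different port, so that address is useless
```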

Session Traversal Utilities for NAT (STUN)

A STUN server tells a device behind NAT which public IP address and port its traffic appears to come from. The device can then share that address with other peers during signaling. This is enough for roughly 80% of connections to succeed, but it does not work when a peer is behind a Symmetric NAT.
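For the curious, a STUN Binding exchange is small enough to sketch by hand. The helpers below (hypothetical names) follow the RFC 5389 message format: a 20-byte header carrying the magic cookie `0x2112A442`, and a success response whose XOR-MAPPED-ADDRESS attribute holds the public address XORed with that cookie. Only IPv4 is handled, and the STUN server hostname in the commented usage is a placeholder.

```python
import os
import socket
import struct

MAGIC_COOKIE = 0x2112A442
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def build_binding_request():
    """Return a 20-byte STUN Binding Request (no attributes) and its transaction ID."""
    transaction_id = os.urandom(12)
    header = struct.pack("!HHI12s", BINDING_REQUEST, 0, MAGIC_COOKIE, transaction_id)
    return header, transaction_id


def parse_xor_mapped_address(response, transaction_id):
    """Extract (public_ip, public_port) from an IPv4 Binding Success Response."""
    msg_type, length, cookie, tid = struct.unpack("!HHI12s", response[:20])
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE or tid != transaction_id:
        raise ValueError("not a matching STUN Binding Success Response")
    offset = 20
    while offset < 20 + length:
        attr_type, attr_len = struct.unpack("!HH", response[offset:offset + 4])
        value = response[offset + 4:offset + 4 + attr_len]
        if attr_type == XOR_MAPPED_ADDRESS and value[1] == 0x01:  # 0x01 = IPv4
            x_port, x_addr = struct.unpack("!HI", value[2:8])
            port = x_port ^ (MAGIC_COOKIE >> 16)  # port is XORed with the cookie's top 16 bits
            addr = x_addr ^ MAGIC_COOKIE          # address is XORed with the full cookie
            return socket.inet_ntoa(struct.pack("!I", addr)), port
        # Attribute values are padded to a multiple of 4 bytes.
        offset += 4 + attr_len + (-attr_len % 4)
    raise ValueError("no IPv4 XOR-MAPPED-ADDRESS attribute")


# Usage against a real STUN server (placeholder hostname):
# sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
# request, tid = build_binding_request()
# sock.sendto(request, ("stun.example.org", 3478))
# print(parse_xor_mapped_address(sock.recv(2048), tid))
```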

Traversal Using Relays around NAT (TURN)

A TURN server relays media between peers when a direct connection is not possible, often because of a Symmetric NAT in the network or a firewall blocking connections.

The TURN server is also known as the “relay” server. It costs more than STUN to run because it relays media for the entire duration of a WebRTC connection. Because TURN is defined as an extension of STUN, TURN server implementations usually include STUN functionality as well.
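One way to see why TURN is a last resort: ICE ranks candidates with a priority formula defined in RFC 8445, and the recommended type preferences put host candidates first, server-reflexive (STUN-discovered) candidates next, and relayed (TURN) candidates last. The sketch below assumes the RFC’s recommended type preferences and a single network interface; browsers may choose different local preferences, so real numbers can differ.

```python
# Recommended type preferences from RFC 8445, section 5.1.2.2.
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


def candidate_priority(cand_type, local_preference=65535, component_id=1):
    """ICE candidate priority as defined in RFC 8445, section 5.1.2.1."""
    return (
        (2**24) * TYPE_PREFERENCE[cand_type]
        + (2**8) * local_preference
        + (256 - component_id)
    )


candidates = ["relay", "host", "srflx"]
ranked = sorted(candidates, key=candidate_priority, reverse=True)
print(ranked)                      # ['host', 'srflx', 'relay']
print(candidate_priority("host"))  # 2130706431
```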

For more details on STUN/TURN servers and how to use them in a simple WebRTC video app, have a look at Build your first WebRTC app with Python and React.

## Advantages and Disadvantages of Mesh Architecture

Advantages

No need for a central media server as the connection is peer-to-peer. This reduces server costs.

Relatively simple to implement in WebRTC.

Disadvantages

Each participant has to send media to every other peer, which requires N-1 uplinks and N-1 downlinks per peer (where N is the number of peers in the call).

Not much control over the media quality.

Exploring the Mixing and Routing architectures requires some familiarity with the idea of WebRTC Media Servers. So let’s start with that.

## WebRTC Media Servers

In the Mesh architecture, bandwidth usage becomes quite high for peers once the number of people in the call exceeds 4. Because each peer sends its media separately to every other peer and decodes every incoming stream, CPU and network consumption climb quickly, which can overheat devices and degrade or even crash the call.
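A rough back-of-the-envelope calculation shows where the limit comes from. The per-stream bitrate and uplink capacity below are assumed values chosen for illustration, not measurements.

```python
STREAM_KBPS = 1500  # assumed bitrate of one outgoing video stream
UPLINK_KBPS = 5000  # assumed upload capacity of a typical home connection


def mesh_upload_kbps(n_peers, stream_kbps=STREAM_KBPS):
    # In a mesh, each peer uploads a separate copy of its video to N-1 peers.
    return (n_peers - 1) * stream_kbps


for n in (2, 4, 6, 10):
    need = mesh_upload_kbps(n)
    print(n, need, "ok" if need <= UPLINK_KBPS else "exceeds uplink")
# 2 1500 ok
# 4 4500 ok
# 6 7500 exceeds uplink
# 10 13500 exceeds uplink
```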

Therefore, for use cases with more than 4 people in a call, it is recommended that you choose an architecture based around a media server.

WebRTC Media Servers are central servers that peers send their media to and receive processed media from. They act as “multimedia middleware” and can offer several benefits. However, implementing one from scratch is far from trivial.

Even with a media server, some peers cannot reach it directly over UDP (for example, behind a restrictive firewall), so a TURN server that relays over TCP or TLS is sometimes still needed.
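As a sketch, an ICE configuration that gives peers a TCP/TLS fallback might look like the following. The dict mirrors the shape of the `RTCConfiguration` object passed to `RTCPeerConnection` in a browser; the hostname, ports, and credentials are placeholders, and `ice_config` and `has_tcp_relay` are hypothetical helpers.

```python
def ice_config(host, username, credential):
    """Build an RTCConfiguration-shaped dict with UDP, TCP, and TLS TURN fallbacks."""
    return {
        "iceServers": [
            {"urls": f"stun:{host}:3478"},
            {
                "urls": [
                    f"turn:{host}:3478?transport=udp",
                    f"turn:{host}:3478?transport=tcp",
                    # TURN over TLS on port 443 tends to pass restrictive firewalls.
                    f"turns:{host}:443?transport=tcp",
                ],
                "username": username,
                "credential": credential,
            },
        ]
    }


def has_tcp_relay(config):
    """Return True if any TURN URL in the config relays over TCP or TLS."""
    for server in config["iceServers"]:
        urls = server["urls"] if isinstance(server["urls"], list) else [server["urls"]]
        if any(u.startswith(("turn:", "turns:")) and "transport=tcp" in u for u in urls):
            return True
    return False


config = ice_config("turn.example.com", "user", "secret")
print(has_tcp_relay(config))  # True
```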

A well-implemented media server is highly optimized for performance and can offer many capabilities beyond forwarding or mixing media. Here are some useful features an ideal media server should have:

Simulcast

Each peer sends its video to the media server at multiple resolutions and bitrates, and the server chooses which version to forward to each receiving peer based on that peer’s configuration or network conditions.
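The server-side choice can be as simple as picking the highest layer that fits a receiver’s estimated bandwidth. The layer names and bitrates below are hypothetical; real SFUs also weigh factors such as the size at which the receiver renders the video.

```python
# Hypothetical simulcast encodings a publisher might send: (layer id, kbps).
LAYERS = [("f", 1500), ("h", 600), ("q", 150)]  # full, half, quarter resolution


def pick_layer(available_kbps, layers=LAYERS):
    """Return the highest-bitrate layer that fits the receiver's estimated bandwidth."""
    for rid, kbps in sorted(layers, key=lambda layer: layer[1], reverse=True):
        if kbps <= available_kbps:
            return rid
    # Nothing fits: fall back to the lowest layer rather than sending nothing.
    return min(layers, key=lambda layer: layer[1])[0]


for bandwidth in (3000, 800, 100):
    print(bandwidth, pick_layer(bandwidth))
# 3000 f
# 800 h
# 100 q
```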

Recording

Call recording is made possible either by directly forwarding all incoming media from the server to storage or by connecting a custom peer to the server that receives all media streams and stores them.

Transcoding

Not all peers in a call necessarily support the same audio/video codec. The media server should resolve this by transcoding the audio/video into a codec the receiving peers support before sending the streams out.
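A simplified sketch of the decision a media server faces, using a hypothetical `plan_codecs` helper: use one codec every peer supports when such a codec exists, and fall back to per-peer transcoding otherwise. In a real call, supported codecs are negotiated through SDP rather than passed around as plain lists.

```python
def plan_codecs(peer_codecs):
    """Decide which codec each peer receives and whether transcoding is needed.

    peer_codecs maps peer id -> list of supported codecs in preference order.
    Returns (codec per peer, needs_transcoding).
    """
    peers = list(peer_codecs)
    common = set.intersection(*(set(codecs) for codecs in peer_codecs.values()))
    if common:
        # Use the first peer's preference order among the shared codecs.
        shared = next(c for c in peer_codecs[peers[0]] if c in common)
        return {peer: shared for peer in peers}, False
    # No shared codec: send each peer its top preference, transcoding on the server.
    return {peer: codecs[0] for peer, codecs in peer_codecs.items()}, True


print(plan_codecs({"a": ["VP8", "H264"], "b": ["H264", "VP8"]}))
# ({'a': 'VP8', 'b': 'VP8'}, False)
print(plan_codecs({"a": ["VP9"], "b": ["H264"]}))
# ({'a': 'VP9', 'b': 'H264'}, True)
```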

Audio/Video Optimisations

Customized audio/video optimization should be possible. The server should be able to send only the media of active speakers to reduce bandwidth consumption, selectively mute a participant’s audio, or prioritize screen-share media over other videos.
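Forwarding only active speakers can be sketched as a top-k selection over recent audio levels. The `select_forwarded_videos` function and its loudness scores (higher means louder) are assumptions for illustration; how a server actually measures audio level is outside this sketch.

```python
import heapq


def select_forwarded_videos(audio_levels, max_videos=3, pinned=()):
    """Choose which participants' video to forward.

    audio_levels maps participant id -> recent loudness score (higher is louder).
    Pinned streams, such as a screen share, are always included first.
    """
    selected = list(pinned)[:max_videos]
    remaining = max_videos - len(selected)
    candidates = {p: lvl for p, lvl in audio_levels.items() if p not in selected}
    loudest = heapq.nlargest(remaining, candidates, key=candidates.get)
    return selected + loudest


levels = {"ann": 0.9, "bob": 0.1, "cai": 0.5, "dee": 0.7}
print(select_forwarded_videos(levels, max_videos=2))                     # ['ann', 'dee']
print(select_forwarded_videos(levels, max_videos=2, pinned=["screen"]))  # ['screen', 'ann']
```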

Here are some widely used types of media servers:

- MCU (Multipoint Control Unit)

- SFU (Selective Forwarding Unit)

- SFU Relay (Distributed SFU)

Now, let’s discuss the architectures (Mixing and Routing) that use these media servers to solve issues commonly faced in the Mesh architecture: high bandwidth expenditure and heavy resource consumption.

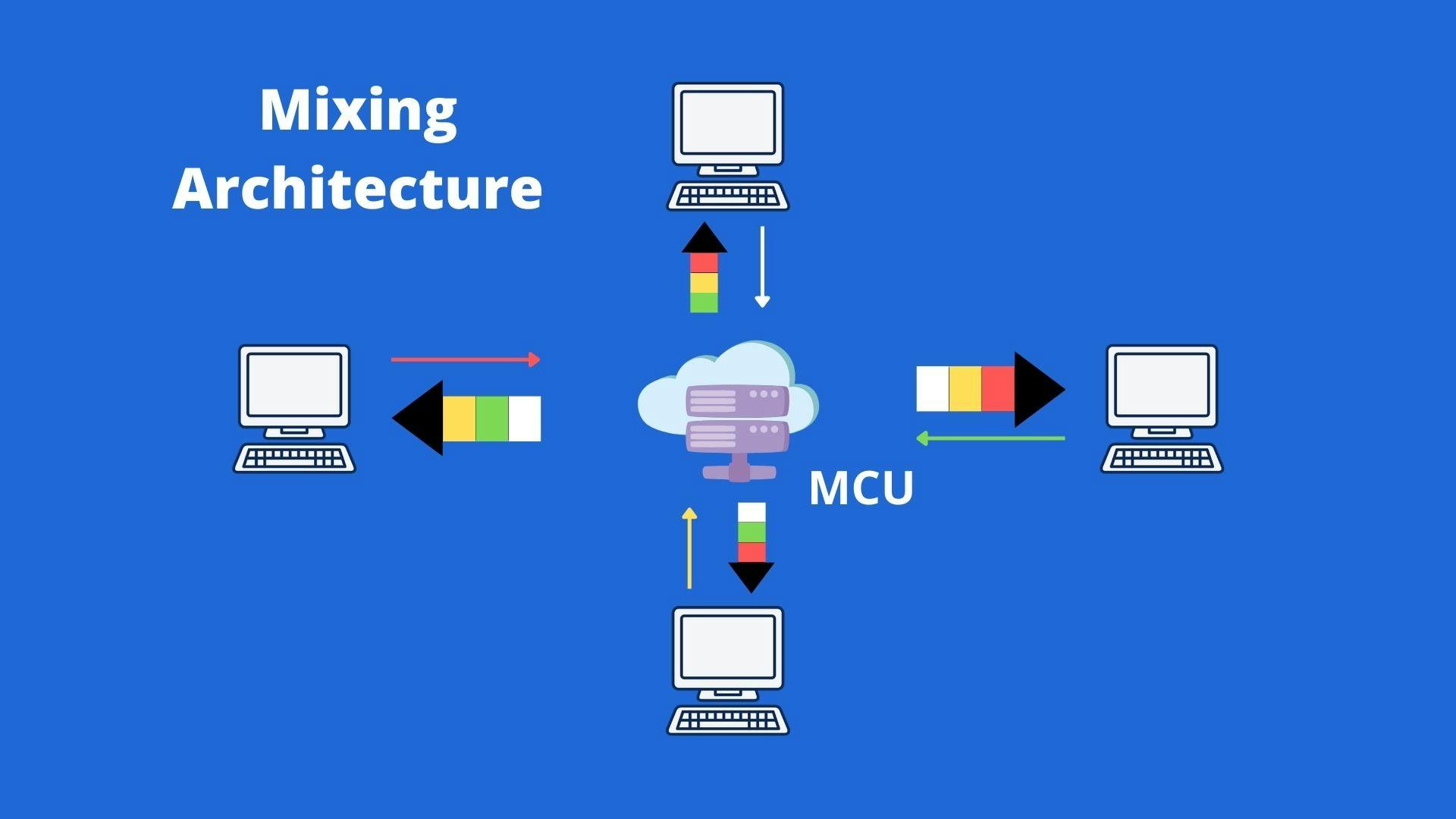

## Mixing Architecture (using the MCU Server)

In this setup, all peers send their media to a central media server. The media server processes the gathered media, packs it into a single stream, and sends it to each peer. Every peer sends a single media stream to, and receives one media stream from, the server. The media server used here is called a Multipoint Control Unit (MCU).

Multipoint Control Unit (MCU)

An MCU server receives media from all peers and reworks it, performing the following functions:

Decoding: Upon gathering the primary media streams from all peers, the MCU decodes them.

Rescaling: The decoded videos are rescaled based on the peer’s network conditions.

Composing: The rescaled videos are combined into a single video stream in a layout requested by that peer.

Encoding: Finally, the video stream is encoded for delivery to the peer.

The MCU performs this process in parallel for each peer in the call. As a result, every peer sends and receives media as a single stream without spending much bandwidth.
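The composing step boils down to a layout calculation per receiver. The sketch below, with hypothetical helper names, computes a simple grid for each peer’s requested output size. It leaves the receiver’s own video out of its composite (one common choice) and omits the actual decoding and encoding, which a real MCU delegates to media codecs.

```python
import math


def grid_layout(n, width, height):
    """Return (x, y, w, h) tiles for n videos in a roughly square grid."""
    if n == 0:
        return []
    cols = math.ceil(math.sqrt(n))
    rows = math.ceil(n / cols)
    tile_w, tile_h = width // cols, height // rows
    return [((i % cols) * tile_w, (i // cols) * tile_h, tile_w, tile_h) for i in range(n)]


def mcu_plan(peers, output_sizes):
    """For each receiver, map every other peer to the tile it is drawn into."""
    plan = {}
    for receiver in peers:
        others = [p for p in peers if p != receiver]
        width, height = output_sizes[receiver]  # rescaled per receiver
        plan[receiver] = dict(zip(others, grid_layout(len(others), width, height)))
    return plan


plan = mcu_plan(["a", "b", "c"], {"a": (1280, 720), "b": (640, 360), "c": (1280, 720)})
print(plan["b"])  # {'a': (0, 0, 320, 360), 'c': (320, 0, 320, 360)}
```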

## Advantages and Disadvantages of Mixing

Advantages

The server sends a single media stream to the peer, which makes it possible for devices with lower processing power to participate in the call.

Requires few client-side resources and little bandwidth, because each peer has just a single uplink and a single downlink.

Server-side recording is possible.

Disadvantages

The server requires high processing power and is generally costly to maintain.

Peers may experience added latency, because media must be decoded, composed, and re-encoded on the server before it is sent.

While this sounds like a great option, the maximum number of peers in a call depends directly on the MCU’s performance. In practice, it is hard to sustain a WebRTC call with more than 30 peers in the Mixing architecture without the MCU heavily draining server resources.

Despite this, Mixing remained the most widely used WebRTC architecture until a few years back. However, it has been slowly replaced by the Routing architecture.

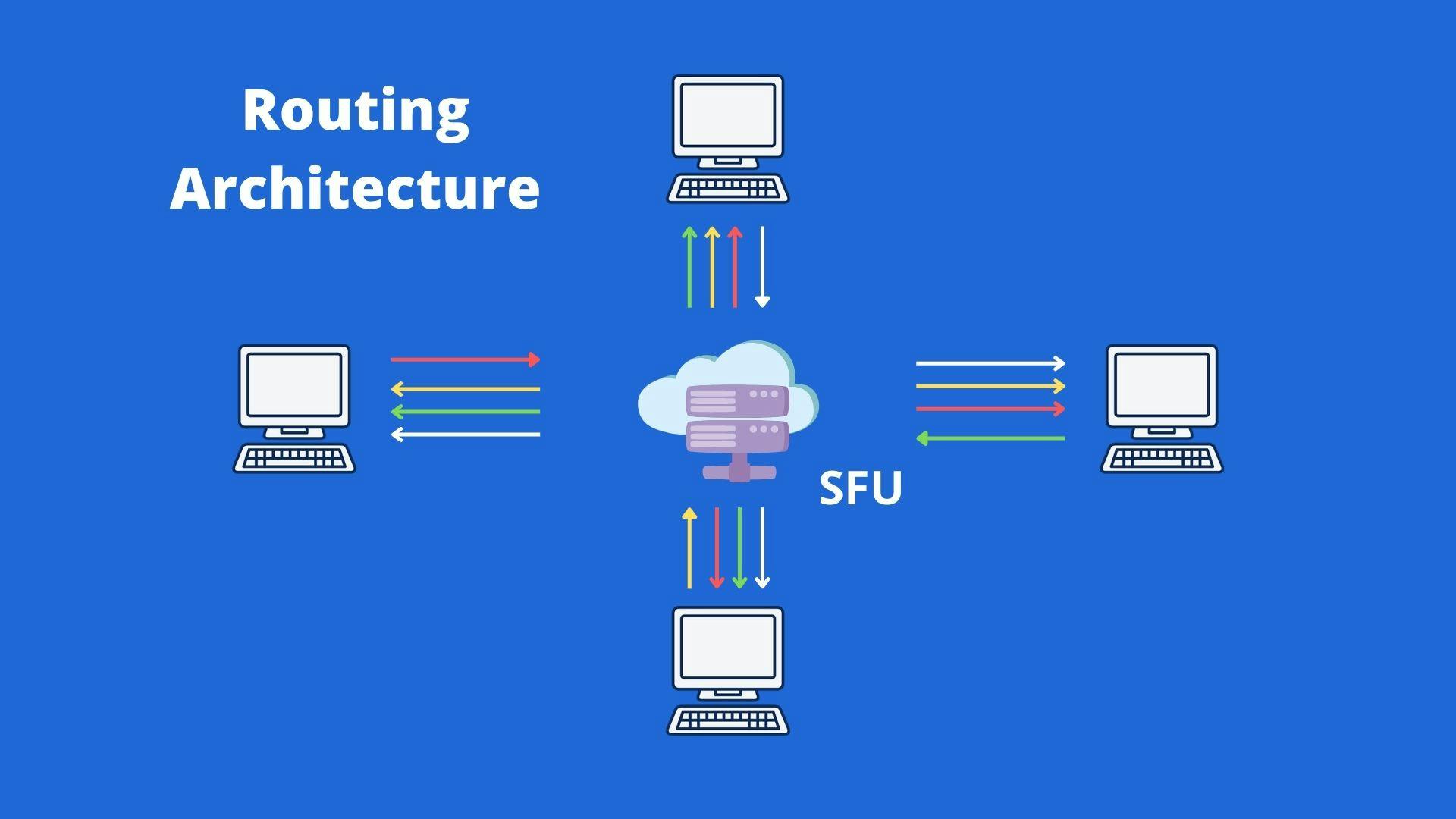

## Routing Architecture (SFU Server)

In this setup, all peers send their media to a central media server, which forwards each stream separately to all other peers without decoding, mixing, or re-encoding it. Every peer sends a single media stream to the server and receives N-1 media streams from it (where N is the number of peers present in the call). The media server used here is called the Selective Forwarding Unit (SFU).

Selective Forwarding Unit (SFU)

An SFU server receives media from all peers in a call and routes it “as is” to every other peer connected to the server. Peers can send more than one media stream to the server, which makes simulcast possible. The SFU can also be customized to decide automatically which stream to send to a specific peer.
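A minimal in-memory model of SFU fan-out, assuming a hypothetical `ToySfu` class: each published packet is forwarded unchanged to every other peer, so with N peers each one ends up receiving N-1 streams.

```python
from collections import defaultdict


class ToySfu:
    """Forward each publisher's packets, unmodified, to every other peer (illustrative only)."""

    def __init__(self):
        self.inboxes = {}  # peer -> {publisher: [packets]}

    def join(self, peer):
        self.inboxes[peer] = defaultdict(list)

    def publish(self, sender, packet):
        # No decoding or mixing: the same payload is fanned out as is.
        for peer, inbox in self.inboxes.items():
            if peer != sender:
                inbox[sender].append(packet)


sfu = ToySfu()
for p in ("a", "b", "c", "d"):
    sfu.join(p)
for p in ("a", "b", "c", "d"):
    sfu.publish(p, f"frame-from-{p}")

print(sorted(sfu.inboxes["a"]))  # ['b', 'c', 'd'], i.e. N-1 incoming streams
```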

## SFU Relay (Distributed SFU)

This is a fairly recent development in the domain. SFU Relay servers are simply SFU servers that can communicate with each other. One SFU server can relay media to another, creating a distributed SFU structure. This reduces the load on a single SFU and makes the whole network more scalable. In theory, any server can connect to the SFU relay via an API and receive the routed media.
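A two-server sketch of the relay idea, assuming a hypothetical `RelaySfu` class: a stream crosses the server-to-server link once, and the receiving SFU fans it out to its own local peers. To keep it short, relayed packets are not forwarded a second time, which suits two servers or a fully connected set of servers.

```python
class RelaySfu:
    """Toy cascaded SFU: local fan-out plus one relay copy per neighboring SFU."""

    def __init__(self, name):
        self.name = name
        self.local = {}       # local peer -> list of (publisher, packet)
        self.neighbors = []   # other RelaySfu instances
        self.relay_sends = 0  # packets sent across server-to-server links

    def connect(self, other):
        self.neighbors.append(other)
        other.neighbors.append(self)

    def join(self, peer):
        self.local[peer] = []

    def publish(self, sender, packet, from_relay=False):
        for peer, inbox in self.local.items():
            if peer != sender:
                inbox.append((sender, packet))
        if not from_relay:
            # One copy per neighbor, no matter how many subscribers it serves.
            for sfu in self.neighbors:
                self.relay_sends += 1
                sfu.publish(sender, packet, from_relay=True)


eu, us = RelaySfu("eu"), RelaySfu("us")
eu.connect(us)
eu.join("alice")
for p in ("bob", "carol", "dave"):
    us.join(p)

eu.publish("alice", "frame-1")
print(eu.relay_sends)                        # 1 copy crossed the link
print([len(v) for v in us.local.values()])   # [1, 1, 1]
```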

## Advantages and Disadvantages of Routing

Advantages

Less demanding on server resources compared to options like MCU.

Works with asymmetric bandwidth (a lower upload rate than download rate), since each peer has only a single uplink and N-1 downlinks.

Simulcast is supported for different resolutions.

Disadvantages

Server-side recording of a single composite stream is not directly available, because the SFU never mixes media. However, the streams can be routed to a peer that records them.

Peer devices must be capable enough to receive and decode multiple downlinks, unlike in the Mixing architecture.

It requires complex design and implementation on the server side.

## Closing Notes

To wrap up, let’s quickly summarise the architectures and corresponding use cases discussed above:

Mesh Architecture with a STUN/TURN server is ideal for calls with 4 or fewer peers.

Mixing Architecture with an MCU server is good for calls with more than 4 peers. It is commonly used where support for legacy devices is a necessity.

Routing Architecture with an SFU server is the modern approach to WebRTC video conferencing. As of now, this is the ideal approach to connecting peers in a call when their number exceeds the limits of the Mesh architecture.

It is also possible to dynamically switch between different architectures based on the call size, so as to find a balance between app performance and server costs.
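The switching rule can be expressed as a small policy function. This is a hypothetical sketch that encodes the rules of thumb above; `mesh_limit` defaults to the article’s figure of 4 and should be tuned for your own bitrates and devices.

```python
def choose_architecture(n_peers, legacy_devices=False, mesh_limit=4):
    """Pick an architecture from the call size, following this article's rules of thumb."""
    if n_peers <= mesh_limit:
        return "mesh"     # peer-to-peer, with STUN/TURN for NAT traversal
    if legacy_devices:
        return "mixing"   # MCU sends one composite stream per peer
    return "routing"      # SFU forwards each stream as is


for size, legacy in ((3, False), (8, False), (8, True)):
    print(size, legacy, choose_architecture(size, legacy_devices=legacy))
```

In practice, switching mid-call also means renegotiating every peer’s connections, so some applications only re-evaluate when the participant count crosses a threshold.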

Choosing and implementing the appropriate WebRTC server and architecture is just one side of the coin. Much more is required to make your WebRTC service reliable: optimizing performance, reducing the call failure rate, and handling edge cases.

If you’re planning on writing your own media server, you should also be aware of some basic problems that often show up in the process:

Managing peers with a bad network connection.

Supporting peers that cannot decode every codec used in the call.

Handling peer reconnections as well as new peers joining in and existing peers leaving the call.

Implementing bandwidth estimation for each peer so that the server does not send more data than the peer can handle.
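For bandwidth estimation, one well-known reference point is the loss-based controller described in the IETF draft for Google Congestion Control (draft-ietf-rmcat-gcc): cut the estimate when packet loss exceeds 10%, grow it by 5% when loss is below 2%, and hold it otherwise. The sketch below implements only that loss-based rule; the full algorithm also includes a delay-based controller, and the function name is hypothetical.

```python
def update_estimate(estimate_kbps, loss_fraction):
    """One step of a loss-based bandwidth estimator modeled on GCC's loss-based controller."""
    if loss_fraction > 0.10:
        # Heavy loss: back off in proportion to the loss rate.
        return estimate_kbps * (1 - 0.5 * loss_fraction)
    if loss_fraction < 0.02:
        # Little or no loss: probe for more bandwidth.
        return estimate_kbps * 1.05
    # Moderate loss: hold the current estimate.
    return estimate_kbps


estimate = 1000.0
for loss in (0.0, 0.0, 0.05, 0.20):  # loss fractions from hypothetical receiver reports
    estimate = update_estimate(estimate, loss)
    print(round(estimate, 1))
```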

In short, even if you choose the right server-side architecture, you will still have a lot to deal with regarding the technicalities of WebRTC.

If you don’t want to deal with the intricacies of WebRTC but still want to host calls with a gold-standard video solution (be it video conferencing or streaming), options like 100ms can do the heavy lifting for you.

## WebRTC with 100ms

100ms’ live video SDKs allow you to add live video capabilities to your application with just a few lines of code. With multiple highly relevant features and a predictable pricing plan, you don’t have to worry about exorbitant server costs for your app.

If your application requires high-quality video capabilities but you’re unsure about building it from scratch, or you just don’t want to deal with fine-tuning the nitty-gritty of WebRTC, 100ms is your best bet.

## Further Reading

Build your first WebRTC app with Python and React